Might I suggest…

In the world of search-based platforms, getting the right information into users’ hands at the right time has always been the name of the game. And with technologies like machine learning (ML) and artificial intelligence (AI) on the rise, serving up relevant content to users has never been more top-of-mind for businesses—or more effective for building differentiation. But how can product leaders accurately measure—and ultimately improve—the efficacy of ML / AI in use cases like these?

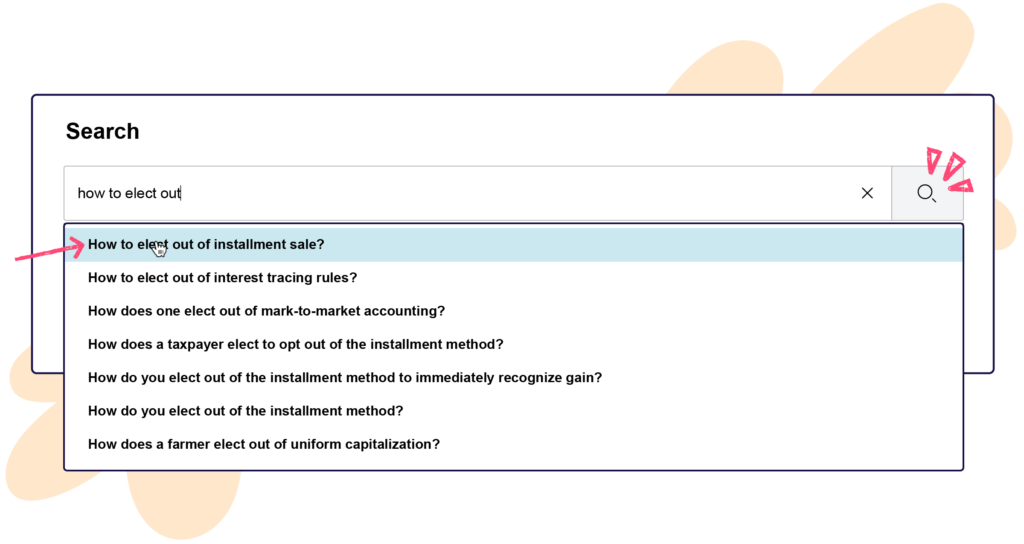

Thomson Reuters’ entire platform is built on the power of its search functionality. Its Checkpoint Edge product lets tax and accounting professionals conduct research and find the information they need quickly and efficiently. “As a search platform, we have a search box where users enter their terminology,” said Vinay Shukla, former product owner at Thomson Reuters. “We also have a feature called our ‘auto suggest box’ that drops down after the user starts entering their [search] terms. We developed AI capabilities to start suggesting recommended searches based off of the user’s entry.”

Shukla and his team needed a way to measure how well this auto suggest feature was performing. He also wanted to home in on the behavioral and psychological aspects of how users engaged with the product’s search feature. “[We wanted to understand things like] when a user is stopping their entry or their keystrokes, or when a user is going in and modifying a query that we’re suggesting,” explained Shukla. “That kind of information is really great for the future maintenance of our AI / ML capabilities, which require a lot of that explicit and implicit data.”

Query the queries

Shukla and his team turned to track events in Pendo to capture three key data points in a user’s search process: (1) the user’s initial input, recorded at the point where they stop typing; (2) whether the user selected a suggested query from the auto suggest dropdown; and (3) the final search the user submitted. “With these three pieces of information, we’re starting to open up a huge world of analyzing whether a user is modifying their query—and what they put in their query,” Shukla explained.
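To make the three-part approach concrete, here is a minimal sketch of how the captured data points might be combined once exported. Pendo’s web agent sends track events with `pendo.track(name, metadata)`; this sketch covers only the analysis side and assumes each search has been flattened into one record with hypothetical fields `first_input`, `selected_suggestion` (`None` when nothing was picked) and `final_query`. The field names and outcome labels are illustrative, not Pendo’s schema or Thomson Reuters’ actual implementation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SearchRecord:
    """One search, assembled from three hypothetical track-event fields."""
    first_input: str                    # text typed when the user paused
    selected_suggestion: Optional[str]  # auto suggest pick, or None
    final_query: str                    # query actually submitted


def classify(record: SearchRecord) -> str:
    """Label how the user arrived at their final search."""
    if record.selected_suggestion is None:
        return "typed_own_query"
    # Case- and whitespace-insensitive comparison of suggestion vs. final query
    if record.final_query.strip().lower() == record.selected_suggestion.strip().lower():
        return "accepted_suggestion"
    return "modified_suggestion"


if __name__ == "__main__":
    records = [
        SearchRecord("depreciation", "depreciation recapture", "depreciation recapture"),
        SearchRecord("1031", "1031 exchange", "1031 exchange deadlines"),
        SearchRecord("payroll", None, "payroll tax"),
    ]
    for r in records:
        print(classify(r))
    # prints: accepted_suggestion, modified_suggestion, typed_own_query (one per line)
```

The “modified_suggestion” bucket is the one that corresponds to a user going in and changing a query the system suggested.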

He noted that this three-fold tracking approach allows his team to dig deeper into user behaviors to ultimately inform and improve the performance of their ML / AI algorithms. “Did they stop typing? Did they notice that their question was already there for them to search? [Track events] essentially give us a view into how well the auto suggest [feature] is performing, and also allow us to start to see how much information the auto suggest AI needed in order to start popping up suggested questions,” said Shukla.
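The two questions in that quote, how often the suggestions get used and how much the user had typed before they became useful, can be sketched as simple aggregates. This assumes the same hypothetical per-search record as above and treats the length of the initial input as a rough proxy for “how much information the auto suggest AI needed”; that proxy is an assumption, not a metric the team has described.

```python
from dataclasses import dataclass
from statistics import median
from typing import List, Optional


@dataclass
class SearchRecord:
    """One search; field names are hypothetical."""
    first_input: str                    # text typed when the user paused
    selected_suggestion: Optional[str]  # auto suggest pick, or None
    final_query: str                    # query actually submitted


def suggest_metrics(records: List[SearchRecord]) -> dict:
    """Summarize auto suggest performance over a batch of searches."""
    selected = [r for r in records if r.selected_suggestion is not None]
    # Share of searches where the user picked something from the dropdown
    rate = len(selected) / len(records) if records else 0.0
    # Characters typed before a suggestion was chosen (proxy for input needed)
    prefix_lengths = [len(r.first_input) for r in selected]
    return {
        "selection_rate": rate,
        "median_prefix_chars": median(prefix_lengths) if prefix_lengths else None,
    }


if __name__ == "__main__":
    batch = [
        SearchRecord("dep", "depreciation rules", "depreciation rules"),
        SearchRecord("1031 ex", "1031 exchange", "1031 exchange"),
        SearchRecord("payroll", None, "payroll tax"),
    ]
    print(suggest_metrics(batch))
```

The median is used rather than the mean so that a few users who type long queries before picking a suggestion do not dominate the figure.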

Shukla noted that tracking events in Pendo has been a big upgrade over the team’s previous methodology, which stopped short of capturing the moment a user’s search gets acted on. “[Having Pendo] has been brilliant because non-technical folks can actually pull the data down from the Pendo dashboard and create pivots off of it. Really quick analysis can now be done by non-data scientists,” he said.

The ability to track these events and understand user engagement has also been hugely beneficial for Thomson Reuters’ editorial team. “We have editors that write content for our platform in-house,” Shukla explained. “They’ve been greatly empowered to analyze and take a look at what types of queries users are putting in. If they actually helped to curate some of those queries, they’re now able to see feedback on what alternatives users are searching for as well. So there’s been a lot of empowerment through this particular track event.”

Shukla and his team now use these track events both to measure the success of the Checkpoint Edge product and to improve its functionality. “If a user selects content from the dropdown, then that feature is a success,” he explained. “It has created a lot of opportunities for us to analyze the types of queries our users are actually inputting versus what they might have seen in the dropdown. It’s a chance for us to figure out if we need to enhance the feature or take things back to the drawing board if queries are serving up irrelevant information.”

Correlating user feedback with analytics has also added a deeper layer of context to how Shukla’s team measures the auto suggest feature’s success. By creating a segment of users who’ve completed a specific track event, generating a Net Promoter Score (NPS) report for those users, and comparing it with a segment that has not engaged with the event, he can better understand the feature’s impact on user sentiment. “It was really important for us to see the trends of how people were reacting in terms of NPS with this feature,” Shukla explained.
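The segment comparison can be sketched on exported data using the standard NPS definition: the percentage of promoters (scores 9 to 10) minus the percentage of detractors (scores 0 to 6), giving a value between -100 and 100. In Pendo the segments and NPS reports are built in the product itself; here the visitor IDs, the `responses` mapping and the `used_feature` set are hypothetical stand-ins for an NPS export and the list of visitors who fired the track event.

```python
from typing import Dict, Iterable, Set


def nps(scores: Iterable[int]) -> float:
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6)."""
    scores = list(scores)
    if not scores:
        raise ValueError("NPS is undefined for an empty segment")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100.0 * (promoters - detractors) / len(scores)


def compare_segments(responses: Dict[str, int], used_feature: Set[str]) -> Dict[str, float]:
    """Split NPS responses by whether the visitor fired the track event."""
    engaged = [s for vid, s in responses.items() if vid in used_feature]
    not_engaged = [s for vid, s in responses.items() if vid not in used_feature]
    return {"engaged": nps(engaged), "not_engaged": nps(not_engaged)}


if __name__ == "__main__":
    responses = {"v1": 10, "v2": 9, "v3": 6, "v4": 8, "v5": 3, "v6": 10}
    used_feature = {"v1", "v2", "v3"}
    print(compare_segments(responses, used_feature))
```

A gap between the two segments shows how sentiment differs alongside feature use; on its own it does not show that the feature caused the difference.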

Finally, Shukla noted that the ease of data extraction from Pendo has been a huge win. “The biggest benefit is being able to put that raw data in front of the people doing the work,” he said. “It doesn’t take a lot of effort or a huge learning curve to pull the data down and filter on queries that are hot in the market right now—especially when it comes to knowing what our users are searching for.”